As we at Atlan started to use big data (massive data from hundreds of sources), we quickly found that we needed to move everything to the cloud. With data sizes in TBs, we couldn’t keep a local copy of our data for analysis. We needed a way for our scripts to interact directly with data stored in the cloud without disrupting our existing workflow.

As the data science world moves towards cloud computing and storage, plenty of tools and support have emerged. With Amazon Web Services (AWS) and Google Cloud Platform (GCP) leading development and innovation in this space, the battle between their cloud storage services, Amazon Simple Storage Service (Amazon S3) and Google Cloud Storage (GCS), continues. We wanted to try out both while keeping the option of reading and writing files on our local systems too. This would give us the flexibility to use one interface for all read and write operations within our R scripts, which power part of our data products at SocialCops.

To solve this, we looked at the options available. There are a few wrappers on top of the APIs (application programming interfaces) provided by AWS and GCP, but none that we could use as a single interface for data input and output. So we decided to build flyio to solve this problem: reading and writing data from Amazon S3, GCS, or a local system with a single change in parameter. The awesome libraries created by the cloudyr project were super helpful in pulling this off.

We are pleased to announce flyio, an open source R package that provides an interface for interacting with data in the cloud or in local storage! You can check out the documentation and follow the development on GitHub.

We aim to make flyio's functions the default read and write functions for any format in R. flyio also gives end users the flexibility to specify the function they want to use to read or write the data. Even if you haven’t moved to cloud storage yet, it would be a good idea to start using flyio today. That way, you will only need to make minimal changes to your scripts once you move to a cloud storage platform.
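The idea of letting the user choose the read or write function can be sketched in a few lines. flyio itself is an R package, so the Python below is only a language-neutral illustration of the pattern, not flyio's API. The helper resolves *where* a file lives (here simply a root directory), and the caller passes in the function that decides *how* the format is parsed or written. `import_with`, `export_with`, and the format helpers are hypothetical names chosen for this sketch.

```python
import csv
import json
import os
from typing import Any, Callable

# Hypothetical sketch, not flyio's R API: the helper decides *where* the file
# lives (a root directory here), the caller-supplied function decides *how*
# it is parsed or serialised.
Reader = Callable[[str], Any]
Writer = Callable[[Any, str], None]


def import_with(name: str, reader: Reader, root: str) -> Any:
    """Resolve `name` under `root`, then read it with the caller's reader."""
    return reader(os.path.join(root, name))


def export_with(obj: Any, name: str, writer: Writer, root: str) -> None:
    """Resolve `name` under `root`, then write `obj` with the caller's writer."""
    writer(obj, os.path.join(root, name))


# Two interchangeable format functions a user might plug in.
def read_csv_rows(path: str) -> list:
    """Parse a CSV file into a list of dicts (all values are strings)."""
    with open(path, newline="") as fh:
        return list(csv.DictReader(fh))


def write_csv_rows(rows: list, path: str) -> None:
    """Write a non-empty list of dicts sharing the same keys as CSV."""
    with open(path, "w", newline="") as fh:
        out = csv.DictWriter(fh, fieldnames=list(rows[0]))
        out.writeheader()
        out.writerows(rows)


def read_json(path: str) -> Any:
    with open(path) as fh:
        return json.load(fh)


def write_json(obj: Any, path: str) -> None:
    with open(path, "w") as fh:
        json.dump(obj, fh)
```

Swapping `read_csv_rows` for `read_json` changes the format without touching the location logic, which is the separation the paragraph above describes.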

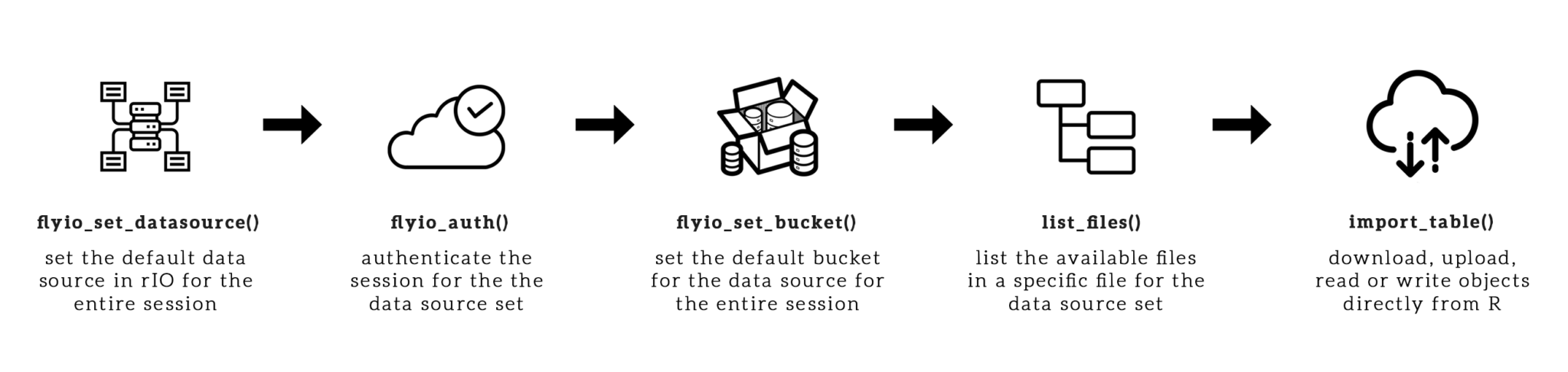

Overview of flyio

flyio provides a common interface for interacting with data in cloud storage or local storage directly from R. It currently supports AWS S3 and Google Cloud Storage. flyio supports reading tables, rasters, shapefiles, and R objects from the data source into memory, and writing them from memory to the data source.

- flyio_set_datasource(): Set the data source (GCS, S3 or local) for all the other functions in flyio.
- flyio_auth(): Authenticate a data source (GCS or S3) so that you have access to the data. Multiple data sources can be authenticated in a single session.
- flyio_set_bucket(): Set the bucket name once for either or both data sources so that you don’t need to pass it to each function.
- list_files(): List the files in the bucket/folder.
- file_exists(): Check whether a file exists in the bucket/folder.
- export_file(): Upload a file to S3 or GCS from R.
- import_file(): Download a file from S3 or GCS.
- import_[table/raster/shp/rds/rda](): Read a file from the configured data source and bucket using a user-defined function.
- export_[table/raster/shp/rds/rda](): Write a file to the configured data source and bucket using a user-defined function.
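To make the division of labour in the function list concrete, here is a minimal Python sketch of the same shape. It is an illustration only, not flyio's R implementation. A session remembers a default data source and one bucket per source, then dispatches listing, existence checks, reads, and writes to the matching backend. `LocalBackend`, `MemoryBackend` (an in-process dictionary standing in for S3 or GCS), `Session`, and all their methods are hypothetical. Real cloud access and authentication (the job of `flyio_auth()`) are deliberately left out.

```python
import os
from typing import Any, Dict, List, Optional, Tuple


class LocalBackend:
    """Stores each bucket as a sub-directory of `root` on local disk."""

    def __init__(self, root: str) -> None:
        self.root = root

    def _path(self, bucket: str, key: str) -> str:
        return os.path.join(self.root, bucket, *key.split("/"))

    def put(self, bucket: str, key: str, data: bytes) -> None:
        path = self._path(bucket, key)
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "wb") as fh:
            fh.write(data)

    def get(self, bucket: str, key: str) -> bytes:
        with open(self._path(bucket, key), "rb") as fh:
            return fh.read()

    def keys(self, bucket: str) -> List[str]:
        base = os.path.join(self.root, bucket)
        found = []
        for dirpath, _dirs, files in os.walk(base):
            for name in files:
                rel = os.path.relpath(os.path.join(dirpath, name), base)
                found.append(rel.replace(os.sep, "/"))
        return sorted(found)


class MemoryBackend:
    """In-process dict standing in for a cloud bucket (hypothetical)."""

    def __init__(self) -> None:
        self.objects: Dict[Tuple[str, str], bytes] = {}

    def put(self, bucket: str, key: str, data: bytes) -> None:
        self.objects[(bucket, key)] = data

    def get(self, bucket: str, key: str) -> bytes:
        return self.objects[(bucket, key)]

    def keys(self, bucket: str) -> List[str]:
        return sorted(k for (b, k) in self.objects if b == bucket)


class Session:
    """Holds the default data source and a bucket name per data source."""

    def __init__(self, backends: Dict[str, Any], default: str = "local") -> None:
        self.backends = backends
        self.data_source = default
        self.buckets: Dict[str, str] = {}

    def set_datasource(self, name: str) -> None:
        if name not in self.backends:
            raise ValueError(f"unknown data source: {name}")
        self.data_source = name

    def set_bucket(self, bucket: str, data_source: Optional[str] = None) -> None:
        self.buckets[data_source or self.data_source] = bucket

    def _resolve(self, data_source: Optional[str], bucket: Optional[str]):
        # Per-call arguments override the session-wide defaults.
        src = data_source or self.data_source
        return self.backends[src], bucket or self.buckets[src]

    def export_text(self, text: str, key: str, data_source=None, bucket=None) -> None:
        backend, b = self._resolve(data_source, bucket)
        backend.put(b, key, text.encode("utf-8"))

    def import_text(self, key: str, data_source=None, bucket=None) -> str:
        backend, b = self._resolve(data_source, bucket)
        return backend.get(b, key).decode("utf-8")

    def list_files(self, prefix: str = "", data_source=None, bucket=None) -> List[str]:
        backend, b = self._resolve(data_source, bucket)
        return [k for k in backend.keys(b) if k.startswith(prefix)]

    def file_exists(self, key: str, data_source=None, bucket=None) -> bool:
        return key in self.list_files(key, data_source, bucket)
```

With this structure, moving a script from local disk to the stand-in cloud backend is one `set_datasource("memory")` call, or a `data_source=` override on a single call. That mirrors the "single change in parameter" goal of flyio, though the real package's arguments may differ.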

How to install flyio

Installing flyio is super easy!

If you encounter a bug, please file an issue on GitHub with steps to reproduce it. You can also use GitHub issues for feature requests, enhancements, feedback, or suggestions.

Example

You can check out the GitHub repository for more information. As we continue to improve and scale flyio, your contributions will add great value to this project.

References

- Cloudyr GCS wrapper: https://github.com/cloudyr/googleCloudStorageR

- Cloudyr S3 wrapper: https://github.com/cloudyr/aws.s3

Photo by Fancycrave.com from Pexels
